Part way through the HCX 4.5 release notes it mentions the above with the following note:

“In HCX 4.5, HCX can be deployed within a single vCenter Server to enable the Bulk migration. This is useful for cluster-to-cluster migrations in environments where the vendor compatibility or other requirements for cluster-to-cluster vMotion cannot be satisfied“

This, to me, is a really, really cool feature. But what’s the use case? How do we set it up? And how does it work? I’ll try and explain…

The x86 server CPU race over the past decade has, until recently, been dominated by Intel. However, AMD have recently gained a lot of traction in the server market with their EPYC CPUs, which pack high core counts and other good bits such as lots of PCIe lanes and fast cache into a single socket. Historically, AMD also had a decent run of success in the server market with Opteron until Intel started their domination. There are many reasons companies choose one over the other, but moving between them in a virtualised environment presents a challenge: there is no live migration between the two vendors’ CPUs. I’ll quote what an engineer posted when this came up internally:

At a low level, Intel and AMD use entirely different CPU instructions for certain very important things (syscalls and hypercalls, both on perf-critical paths), in a way that cannot be changed at runtime.

Theoretically: you could emulate all that stuff and get compatibility with maybe a 50% across-the-board slowdown. In practice, nobody wants that.

Let’s pretend for a second that you are running VCF and have a Workload Domain with 10 Intel based hosts in a cluster and you’ve commissioned 10 hosts with AMD CPUs in another cluster, and you want to move your workloads to the new hardware. There are many ways to move the VMs over and all present unique challenges. You could shut them all down, then cold vMotion them to the new hosts and power them back on. This relies on shared storage and networking between the clusters. And depending on the number of VMs you have, it’s going to take a long time unless you figure out some way of automating it and get the application owners on board. There’s also 3rd party software that can do this. And now HCX can do it too.

Typically with HCX we have a source site (the Connector) and a destination site, known as the ‘Cloud site’, each with its own vCenter Server. But if we are managing all hosts with the same vCenter Server, how can we have both HCX Managers registered with it when there is a 1:1 mapping between an HCX Manager and a vCenter Server? Well, it turns out that there are two scenarios (that I know of) in which both the Connector and the Cloud Manager can be registered with the same vCenter Server, and this is one of them. I’ll cover the second in another blog post soon. Hint: it’s OSAM.

The first step is to deploy the HCX Cloud Manager; there are instructions in the HCX documentation. Once the Cloud Manager is activated, updated, and registered with vCenter and NSX Manager, move on to deploying the HCX Connector, again activating, updating, and registering it with the vCenter Server. I won’t go into the architecture in too much depth as it depends on your environment. Once both are deployed, there are a few initial steps to complete (all of these are in the HCX documentation):

- Pair the Managers

- Create Network and Compute Profiles at the source and destination HCX Servers and ensure that Bulk Migration is a selected service

- Create a Service Mesh
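As a rough illustration of the "ensure that Bulk Migration is a selected service" step, here is a minimal Python sketch that checks a list of Compute Profiles for the service before a Service Mesh is built. The dictionary layout, the profile names and the helper `profiles_missing_service` are all hypothetical and exist only for this example; this is not the HCX API, and in practice you would confirm the setting in the HCX UI.

```python
# Hypothetical data model: each Compute Profile is a plain dict.
# This is NOT the HCX API, just an illustration of the check.
REQUIRED_SERVICE = "Bulk Migration"


def profiles_missing_service(compute_profiles, service=REQUIRED_SERVICE):
    """Return the names of profiles that do not have `service` selected."""
    return [
        profile["name"]
        for profile in compute_profiles
        if service not in profile.get("services", [])
    ]


# Example profile names and service lists are made up for this sketch.
profiles = [
    {"name": "connector-cp", "site": "source",
     "services": ["Hybrid Interconnect", "Bulk Migration"]},
    {"name": "cloud-cp", "site": "destination",
     "services": ["Hybrid Interconnect"]},
]

print(profiles_missing_service(profiles))
```

In this made-up example the destination profile would be flagged, which is the situation to fix before creating the Service Mesh.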

I mentioned above that you should deploy the Cloud Manager first. The reason for this is that HCX deploys a plugin to vSphere where you can configure migrations. The Connector and Cloud plugins will overwrite one another, and since migrations are always initiated at the source, we want the Connector plugin to be the one with the active vSphere registration, which is why the Connector is registered last.

Once everything is configured and the Service Mesh is deployed, we start a new migration exactly how we would if we were migrating to the cloud, except for the following:

- The VM will not retain its MAC address. See VMware KB article 219

- The source and destination folders must be different, or you get an error similar to: ‘A VM with name hcxtest1 already exists in the destination inventory. Please rename the VM and retry.‘ To be clear, this is a vCenter limitation where you cannot have two VMs with the same name in the same folder.

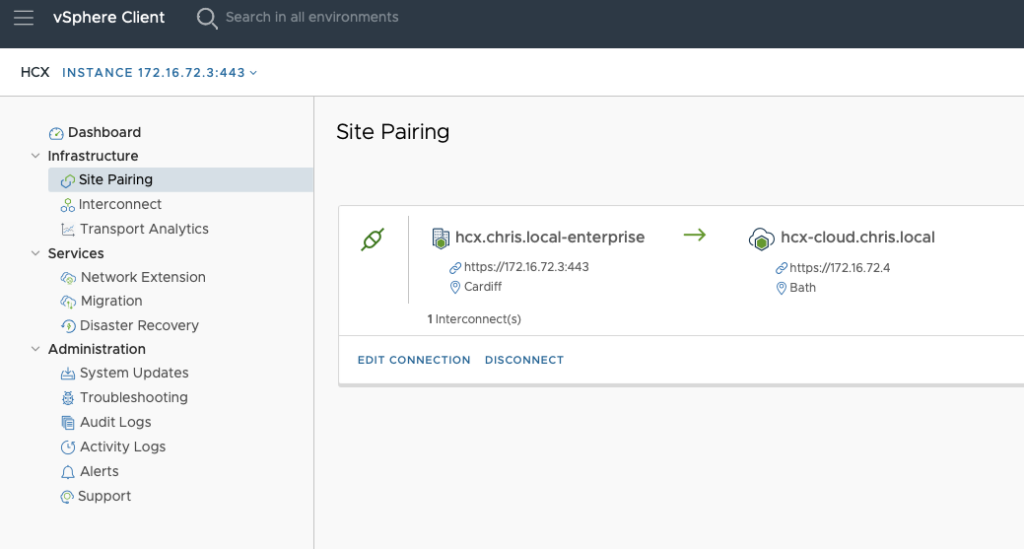
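The folder restriction above is easy to check before you submit a migration wave. The following is a minimal Python sketch, assuming you already have a list of planned moves with source and destination folder paths; the `PlannedMove` type, the `folder_conflicts` helper and the example inventory paths are hypothetical and not part of HCX.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedMove:
    vm_name: str
    source_folder: str       # inventory path of the folder the VM is in today
    destination_folder: str  # inventory path chosen for the migrated VM


def folder_conflicts(moves):
    """Return names of VMs whose destination folder equals their source folder."""
    def norm(path):
        # Ignore surrounding whitespace and a trailing slash when comparing.
        return path.strip().rstrip("/")

    return [
        move.vm_name
        for move in moves
        if norm(move.source_folder) == norm(move.destination_folder)
    ]


# Example paths are invented for illustration.
moves = [
    PlannedMove("hcxtest1", "/DC/vm/Workloads", "/DC/vm/Workloads/"),
    PlannedMove("hcxtest2", "/DC/vm/Workloads", "/DC/vm/Migrated"),
]

print(folder_conflicts(moves))
```

Any VM returned by `folder_conflicts` would need a different destination folder before the migration is started.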

Here’s the site pairing, showing both managers on the same subnet:

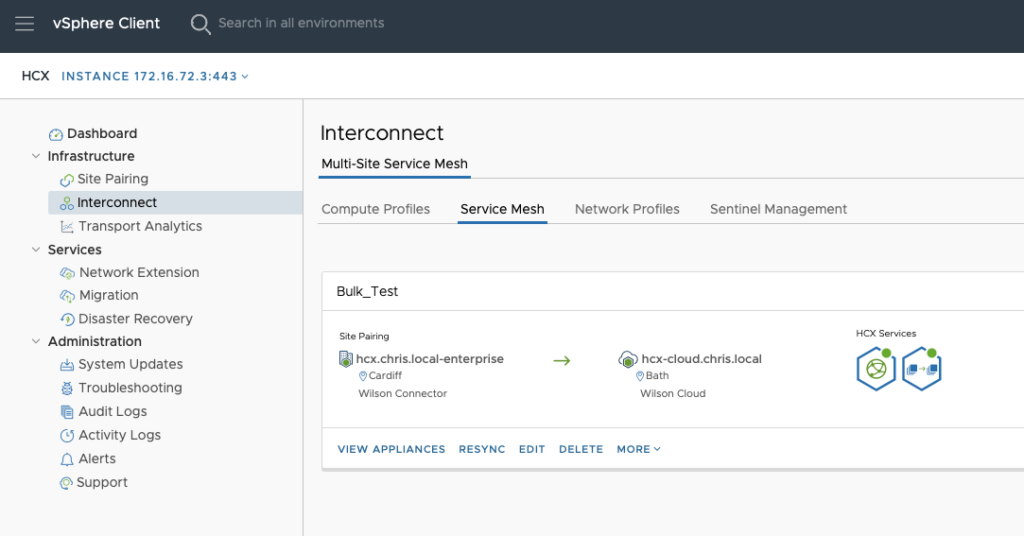

Here’s the Service Mesh:

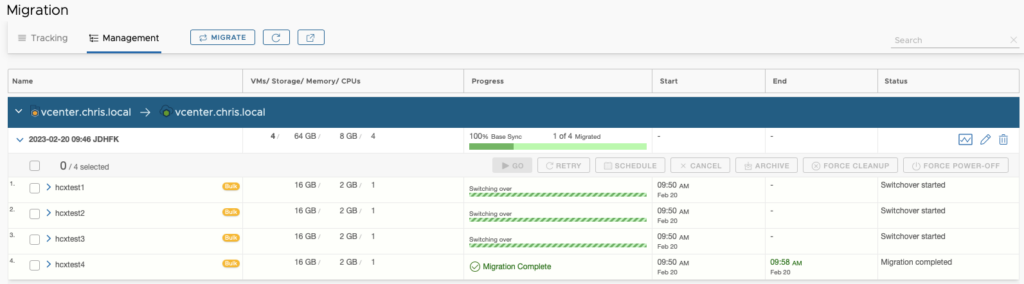

Here’s the view during a migration:

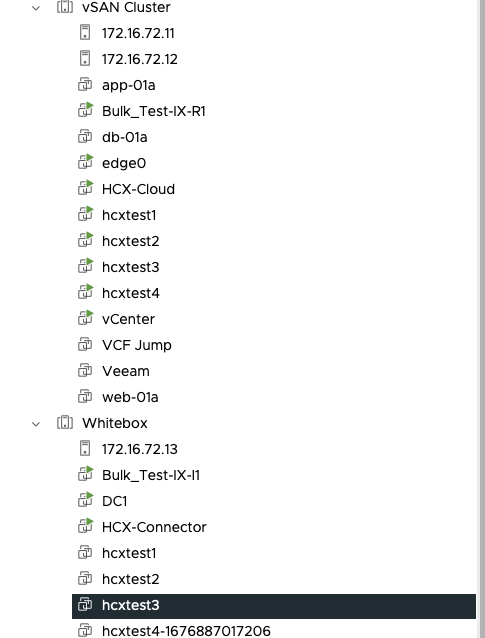

And finally a view of the VMs in vCenter as they move from the source Cluster (called ‘Whitebox’ in my example) to the destination (vSAN Cluster):

There will be a brief period where both the original and the migrated copy of a VM exist in your inventory. This is temporary and in keeping with how Bulk Migration works: the original VMs have the POSIX timestamp appended to their name, and they are cleaned up by the migration workflow.
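If you want to spot the renamed originals while a wave is in flight, something like the Python sketch below could help. It assumes the renamed VM looks like `<original name>-<10-digit epoch seconds>`; I have not confirmed the exact separator or format, so treat the pattern and the helper names (`split_renamed_source`, `leftover_sources`) as assumptions to adjust against what you actually see in vCenter.

```python
import re
from datetime import datetime, timezone

# ASSUMPTION: the suffix is a hyphen followed by 10 digits of epoch seconds.
_SUFFIX = re.compile(r"^(?P<base>.+)-(?P<epoch>\d{10})$")


def split_renamed_source(name):
    """Return (base_name, utc_datetime) if `name` ends in the assumed suffix."""
    match = _SUFFIX.match(name)
    if match is None:
        return None
    when = datetime.fromtimestamp(int(match.group("epoch")), tz=timezone.utc)
    return match.group("base"), when


def leftover_sources(inventory_names):
    """Names that look like renamed originals whose base name also exists."""
    names = set(inventory_names)
    leftovers = []
    for name in inventory_names:
        parsed = split_renamed_source(name)
        if parsed is not None and parsed[0] in names:
            leftovers.append(name)
    return leftovers


# Invented inventory listing for illustration.
inventory = ["hcxtest1", "hcxtest1-1670000000", "hcxtest2"]
print(leftover_sources(inventory))
```

Matching on the base name as well as the suffix avoids flagging a VM that simply happens to end in ten digits.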

This is a great feature and will allow rapid migration from one Cluster to another where live vMotions are not possible due to CPU architecture constraints.

Just as a reminder, the source and destination folders must be different when you configure the migration, and the VMs cannot keep their MAC address. If you need to move VMs and retain their MAC address for whatever reason, you’ll have to do it another way.

Finally, this architecture is at present only supported on-prem. You cannot deploy it in VMC on AWS, for example, because the solution is contingent on being able to register the HCX Connector with the vCenter Server, which requires full administrative privileges that we do not have in public clouds.

Thanks for reading.
