HCX OS Assisted Migration (OSAM for short) is a VMware tool that migrates VMs hosted on either KVM or Hyper-V into vSphere. It’s designed to work at scale: it can run 50 concurrent migrations per Service Mesh and scales up to four Service Meshes, allowing up to 200 parallel migrations in total. It can be a faster way of moving workloads than, for example, vCenter Converter.

An interesting question I’ve seen asked a few times is whether migrating native Azure VMs will work. After all, Azure uses Hyper-V*, so there’s no reason why it wouldn’t, right? Provided you can meet the network requirements, you may think it’s possible.

Before I go into detail about what works and what doesn’t, I just want to highlight two things:

- *Azure Hypervisor is built specifically for Azure. It may be related to Hyper-V and might even share code, but they are two different hypervisors. Therefore, Hyper-V != Azure.

- From the documentation, we can see that only KVM and Hyper-V are supported. There is no mention of Azure Hypervisor.

Disclaimer: Based on the above, this is not officially supported. Proceed at your own risk; there may be unexplained behaviour or potential data loss!

If you want to migrate native Azure VMs, use vCenter Converter, as it will likely have much higher success rates.

Nevertheless, I like to tinker and try things and wanted to try it out. I’m lucky enough to have a Visual Studio Professional subscription and this gives me (from memory) ~£50 a month to try things in Azure. I won’t go into the Azure specifics, but I built a small lab by creating the following:

- Resource Group

- VNET and subnet for the VMs to use

- Two testing VMs (1x Ubuntu 18 LTS, 1x Server 2016)

- Virtual network gateway

- Local network gateway

- Site to Site VPN

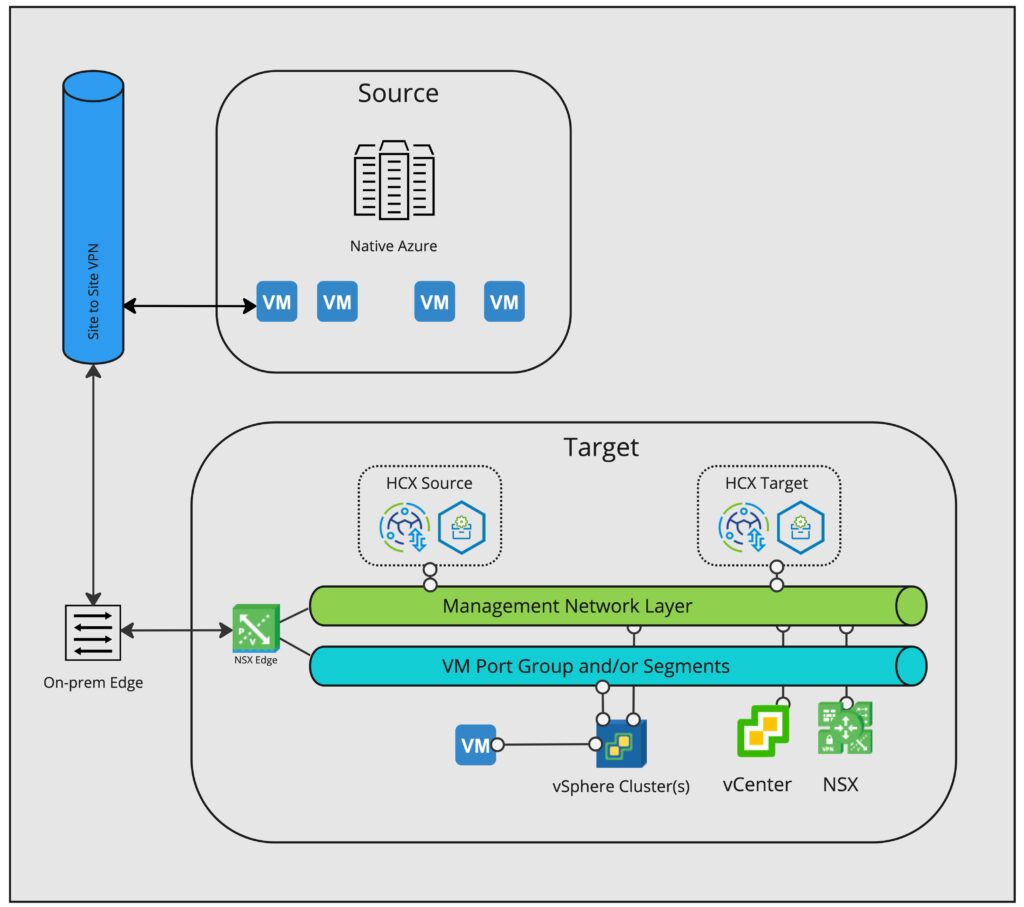

The Site to Site VPN connects to my UniFi Dream Machine SE (UDM SE), and my lab network is defined in the Azure Local Network Gateway. On the UDM SE, I created a Site to Site VPN to Azure and specified the Azure subnet as the remote subnet. This allows local network access between my lab and the Azure VMs. From an HCX perspective, it follows similar logic to migrating from Hyper-V/KVM using the single site architecture, which I recently blogged about. At a high level, it looks something like this:

Server 2016

First up was a Server 2016 VM, using the Standard DS1 v2 size. After it deployed, I logged into the VM console and enabled remote access plus ICMP responses in the firewall. I confirmed network connectivity from my lab by pinging its private IP address. However, when I then tried to connect via Remote Desktop, the connection struggled. Having seen this behaviour in the past, I suspected the MTU. After a bit of reading, I found that Azure attempts to fragment packets at 1400 bytes and the Site to Site VPN runs at 1400. Unfortunately, there isn’t an easy way on the UDM SE to lower the MTU of a particular VPN. I also didn’t want to change global MSS settings in case it interfered with my lab or home network. Instead, I used Azure’s option of sending a command to the VM to lower the MTU, with something similar to:

netsh interface ipv4 set subinterface Ethernet mtu=1400 store=persistent

There are other ways to do this. For example, you could open up RDP to the internet (I highly recommend restricting access with Azure Network Security Groups if doing this) and change the MTU on the adaptor itself in the network control panel. Once this was changed, RDP worked well.

If your VPN device supports lowering the VPN interface MTU and/or global MSS clamping, do that and leave the VM as is. If it doesn’t, you must lower the guest MTU to 1400. Otherwise, the Sentinel agent will struggle to send data to the Sentinel Gateway and the job will fail.
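The header arithmetic behind these numbers is simple enough to sketch. The Python below is a minimal illustration and assumes IPv4 with no IP or TCP options. It works out two values:

- the largest don’t-fragment ping payload that fits in a given MTU, which is handy for checking the path end to end before starting a migration;
- the MSS value a device doing MSS clamping would need.

It does not model the IPsec overhead of the tunnel itself. The host address is a placeholder.

```python
"""Header arithmetic for checking a reduced MTU across a Site to Site VPN."""

IPV4_HEADER = 20  # bytes: IPv4 header without options
ICMP_HEADER = 8   # bytes: ICMP echo request header
TCP_HEADER = 20   # bytes: TCP header without options


def df_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in `mtu` with Don't Fragment set."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def tcp_mss(mtu: int) -> int:
    """MSS that keeps IPv4 TCP segments within `mtu` (the value to clamp to)."""
    return mtu - IPV4_HEADER - TCP_HEADER


def df_ping_commands(host: str, mtu: int) -> dict:
    """Don't-fragment ping commands that test whether `mtu` fits the path."""
    size = df_ping_payload(mtu)
    return {
        "windows": f"ping -f -l {size} {host}",        # -f sets DF, -l sets payload size
        "linux": f"ping -M do -s {size} -c 3 {host}",  # -M do forbids fragmentation
    }


if __name__ == "__main__":
    mtu = 1400
    host = "10.0.0.4"  # placeholder: substitute the Azure VM's private IP
    print(f"MTU {mtu}: ping payload {df_ping_payload(mtu)}, TCP MSS {tcp_mss(mtu)}")
    for os_name, cmd in df_ping_commands(host, mtu).items():
        print(f"{os_name}: {cmd}")
```

For an MTU of 1400, this gives a 1372-byte ping payload and an MSS of 1360. If the don’t-fragment ping fails at that size, the path MTU is lower still.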

I then copied over the OSAM Sentinel installer, ran it on the VM and followed the usual steps to start migrating the VM. It succeeded without much issue! It’s worth noting that the VM will have to be re-IP’d post migration, as I’m not aware of any method to bridge an L2 network to Azure.

I may try out other instance sizes in the future, or other builds of Windows Server, but testing at scale is for another day!

Ubuntu 18 LTS

I was pretty happy that Server 2016 worked, so I figured Ubuntu would be straightforward. I was wrong! To save you reading on: it does work, albeit with some major caveats which, quite frankly, make it unviable. This is likely due to the way the cloud-init image is deployed in Azure, or to my Linux knowledge not stretching far enough to make it work. I had to make several changes just to stop the migration failing within a few minutes.

Before I go further, I just want to reiterate that this is not officially supported. HCX is not equipped to deal with Azure VMs, so a lot of manual intervention is required.

First, if necessary, lower the MTU as you would with Windows (unless your Site to Site VPN supports lowering the MTU or MSS!). To do so, create a new file called /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg containing one line: network: {config: disabled}. This stops cloud-init overwriting the Netplan file /etc/netplan/50-cloud-init.yaml. You can then change the MTU in that file and run sudo netplan apply.
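Those two steps can be scripted. The Python below is a minimal sketch that makes these assumptions:

- the interface is named eth0 in the Netplan file, as on the Azure image;
- the file uses block-style YAML.

It writes the cloud-init drop-in and then prints, rather than saves, a copy of the Netplan file with an mtu key added or updated. That way you can review the change before saving it and applying it with sudo netplan apply. The line-based edit is a simplification; a YAML library would be more robust.

```python
"""Sketch: stop cloud-init rewriting Netplan, then set an MTU on the NIC."""
import re
import sys
from pathlib import Path

# Drop-in that disables cloud-init's network configuration on boot.
DISABLE_PATH = Path("/etc/cloud/cloud.cfg.d/99-disable-network-config.cfg")
DISABLE_TEXT = "network: {config: disabled}\n"


def _indent(line: str) -> int:
    return len(line) - len(line.lstrip(" "))


def set_netplan_mtu(yaml_text: str, iface: str = "eth0", mtu: int = 1400) -> str:
    """Add or update `mtu:` under `iface:` in block-style Netplan YAML."""
    lines = yaml_text.splitlines()
    header = re.compile(rf"^( *){re.escape(iface)}:\s*$")
    for idx, line in enumerate(lines):
        m = header.match(line)
        if not m:
            continue
        parent = len(m.group(1))
        # The interface's block is every following line indented deeper than its key.
        end = idx + 1
        while end < len(lines) and (not lines[end].strip() or _indent(lines[end]) > parent):
            end += 1
        children = [j for j in range(idx + 1, end) if lines[j].strip()]
        child = _indent(lines[children[0]]) if children else parent + 4
        new_line = " " * child + f"mtu: {mtu}"
        for j in children:
            if _indent(lines[j]) == child and lines[j].strip().startswith("mtu:"):
                lines[j] = new_line  # update an existing MTU
                break
        else:
            lines.insert(idx + 1, new_line)  # no MTU yet: add it at the top of the block
        return "\n".join(lines) + "\n"
    raise ValueError(f"interface {iface!r} not found in Netplan config")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (as root): python3 netplan_mtu.py /etc/netplan/50-cloud-init.yaml
    DISABLE_PATH.write_text(DISABLE_TEXT)
    sys.stdout.write(set_netplan_mtu(Path(sys.argv[1]).read_text()))
```

The script name netplan_mtu.py is hypothetical. Running the script a second time updates the existing mtu line rather than adding a duplicate.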

Note that the Netplan config includes driver: hv_netvsc and a fixed MAC address. Changing these values made little difference to networking in vSphere, which I’ll explain later on.

According to the HCX docs, OSAM doesn’t support the fs type auto. Looking in /etc/fstab, we see:

cat /etc/fstab

# CLOUD_IMG: This file was created/modified by the Cloud Image build process

UUID=36e225f7-d24a-4257-8594-1a146437f6ac / ext4 defaults,discard 0 1

UUID=0693-EB12 /boot/efi vfat umask=0077 0 1

/dev/disk/cloud/azure_resource-part1 /mnt auto defaults,nofail,x-systemd.requires=cloud-init.service,_netdev,comment=cloudconfig 0 2

You can just comment out this last line (the Azure resource disk mounted at /mnt), as it is a temporary file system. Then reboot.
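If you would rather not edit /etc/fstab by hand, the change can be scripted. This is a hypothetical helper, not part of HCX. It comments out any active entry whose third field (the file system type) is auto and leaves everything else untouched. It prints the result rather than overwriting the file, so back up /etc/fstab and review the output before replacing it.

```python
"""Sketch: comment out /etc/fstab entries whose file system type is `auto`."""
import sys


def comment_out_auto_mounts(fstab_text: str) -> str:
    out = []
    for line in fstab_text.splitlines():
        # fstab fields: device, mount point, fs type, options, dump, pass
        fields = line.split()
        if len(fields) >= 3 and not fields[0].startswith("#") and fields[2] == "auto":
            out.append("# " + line)  # disable the entry, keep it for reference
        else:
            out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 fstab_auto.py /etc/fstab > fstab.new  (review, then replace)
    with open(sys.argv[1]) as f:
        sys.stdout.write(comment_out_auto_mounts(f.read()))
```

Because commented lines are skipped, running the helper twice gives the same result as running it once.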

After changing /etc/fstab, the VM migrated but it failed during the fixup process, fully consuming a single vCPU after powering on:

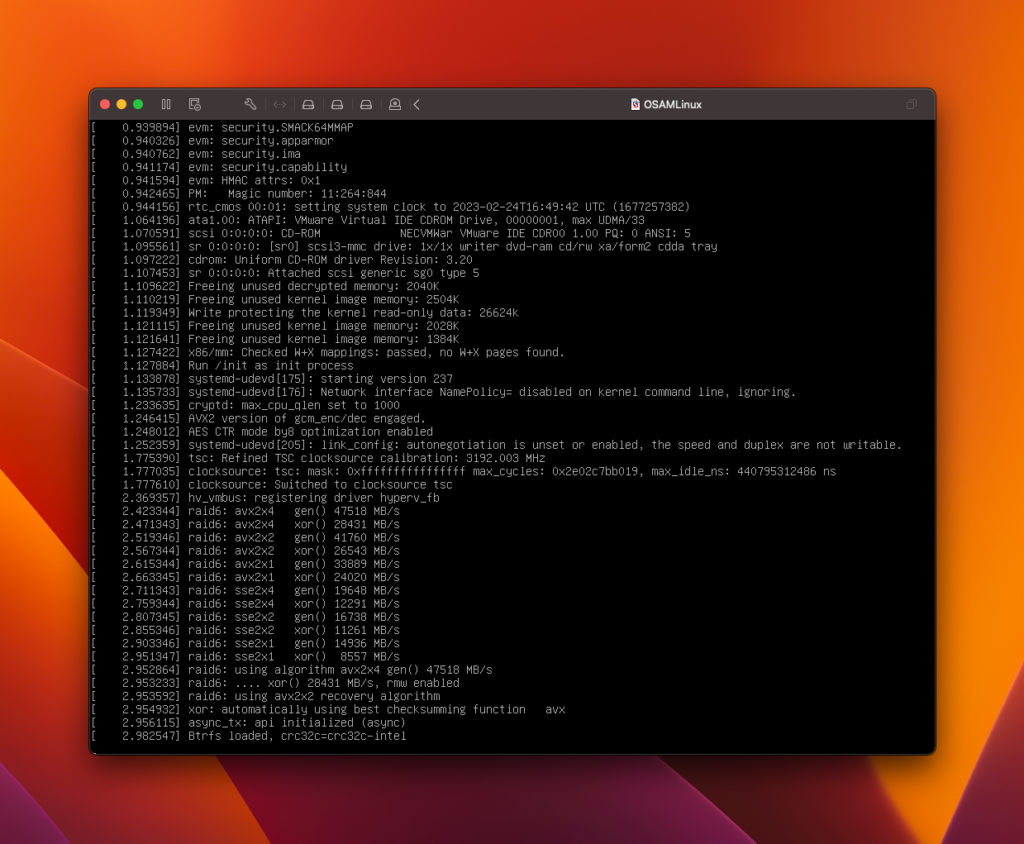

I did some research and quickly found that this is related to GRUB: Azure uses a serial console, and vSphere doesn’t. This is what the GRUB configuration looks like prior to migration:

cat /etc/default/grub.d/50-cloudimg-settings.cfg

# Windows Azure specific grub settings

# CLOUD_IMG: This file was created/modified by the Cloud Image build process

# Set the default commandline

GRUB_CMDLINE_LINUX="$GRUB_CMDLINE_LINUX console=tty1 console=ttyS0 earlyprintk=ttyS0"

GRUB_CMDLINE_LINUX_DEFAULT=""

# Set the grub console type

GRUB_TERMINAL=serial

# Set the serial command

GRUB_SERIAL_COMMAND="serial --speed=9600 --unit=0 --word=8 --parity=no --stop=1"

# Set the recordfail timeout

GRUB_RECORDFAIL_TIMEOUT=30

# Set the grub timeout style

GRUB_TIMEOUT_STYLE=countdown

# Wait briefly on grub prompt

GRUB_TIMEOUT=1

I removed the serial console entries from this file. Then run sudo update-grub and reboot. There are probably better ways to do this, but this worked for me and allowed testing to proceed.
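The same GRUB change can be sketched in Python. It assumes the serial console settings are:

- the ttyS0 kernel arguments (console=ttyS0 and earlyprintk=ttyS0);
- GRUB_TERMINAL=serial and GRUB_SERIAL_COMMAND.

It keeps console=tty1, comments out the two GRUB serial settings and prints the result for review. To apply it, write the output back to /etc/default/grub.d/50-cloudimg-settings.cfg and run sudo update-grub.

```python
"""Sketch: remove Azure's serial console settings from the GRUB drop-in."""
import re
import sys

# Kernel arguments that send console output to a serial port (ttyS0 on Azure).
SERIAL_ARG = re.compile(r"^(console|earlyprintk)=ttyS\d+")
# A GRUB_CMDLINE_LINUX or GRUB_CMDLINE_LINUX_DEFAULT assignment in double quotes.
CMDLINE = re.compile(r'^(GRUB_CMDLINE_LINUX(?:_DEFAULT)?=")(.*)("\s*)$')
# Settings that make GRUB itself use the serial terminal.
SERIAL_SETTINGS = ("GRUB_TERMINAL=serial", "GRUB_SERIAL_COMMAND=")


def strip_serial_console(cfg_text: str) -> str:
    out = []
    for line in cfg_text.splitlines():
        m = CMDLINE.match(line)
        if m:
            # Keep every argument except the serial ones (console=tty1 survives).
            args = [a for a in m.group(2).split() if not SERIAL_ARG.match(a)]
            out.append(m.group(1) + " ".join(args) + m.group(3))
        elif line.startswith(SERIAL_SETTINGS):
            out.append("# " + line)  # comment out rather than delete
        else:
            out.append(line)
    return "\n".join(out) + "\n"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 grub_serial.py /etc/default/grub.d/50-cloudimg-settings.cfg
    with open(sys.argv[1]) as f:
        sys.stdout.write(strip_serial_console(f.read()))
```

The script name grub_serial.py is hypothetical. Commenting the settings out rather than deleting them keeps a record of the original Azure values.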

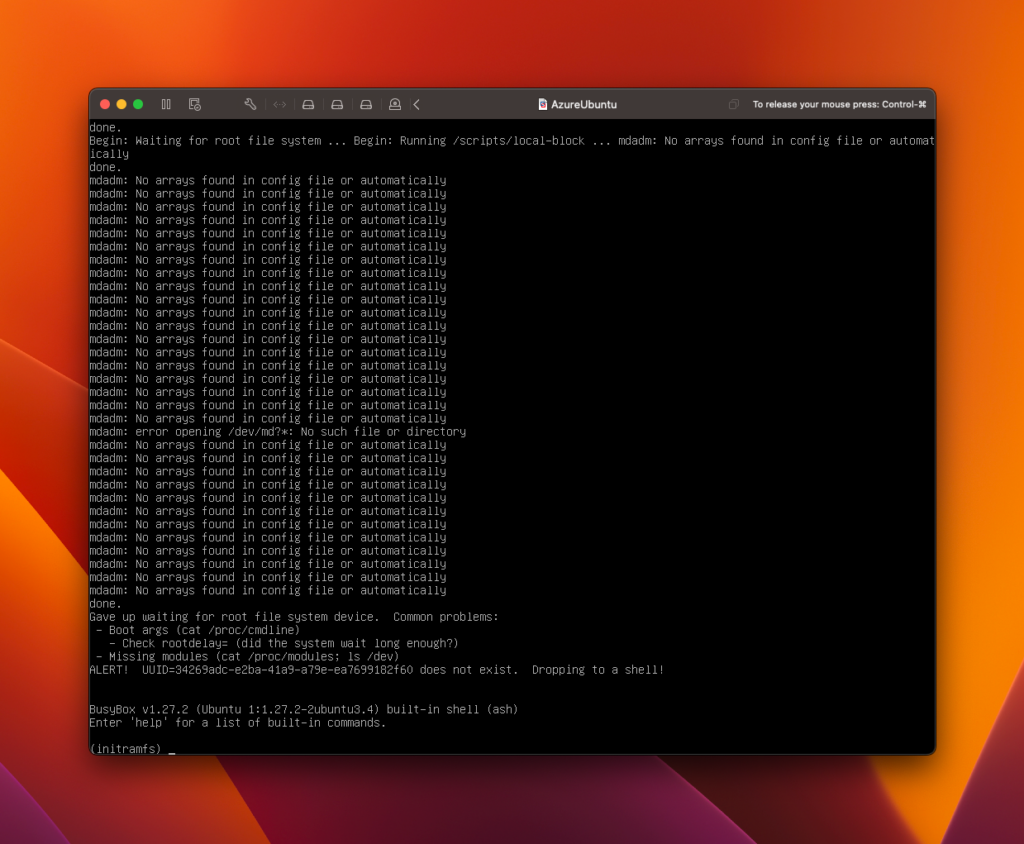

The VM will migrate into vSphere, Photon will boot and mount the migrated disk to do HCX OSAM fixup operations. However while it looks like it will work, the VM fails to find a bootable device after trying to boot into the Ubuntu OS:

(yes the VM name is different to the earlier screenshot, I tried this a few times!)

Powering the VM off and changing the disk controller from Paravirtual to LSI Logic SAS allows the VM to boot. However, the guest doesn’t detect the vmxnet3 adaptor which the OSAM process assigns to the VM. Adding an E1000E adaptor does give the VM network connectivity. Both behaviours are usually attributed to VMware Tools not being installed (i.e. missing drivers) or to the Netplan misconfiguration noted above, yet Tools are installed. I don’t know why this happens. Perhaps the apt sources point to Azure repositories with a different build of open-vm-tools that doesn’t include the VMware-specific drivers. I’m not sure, as that’s a little beyond my Azure/Ubuntu knowledge. There is likely some other networking-related config to fix, as DHCP/DNS-related errors are printed in the console. I do plan on revisiting this in the future to investigate further.
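One thing that might be worth ruling out is the kernel itself. vmxnet3 and vmw_pvscsi (the PVSCSI driver) are provided by the Linux kernel rather than by open-vm-tools. If the kernel installed on the Azure image doesn’t ship them, that would be consistent with both symptoms above, whether or not Tools are installed.

The sketch below is an untested diagnostic that assumes the standard /lib/modules/<kernel release> layout. Run on the VM before migration, it reports whether each driver is built in, available as a loadable module, or missing.

```python
"""Sketch: check whether the running kernel ships the VMware drivers OSAM relies on."""
import platform
from pathlib import Path

# PVSCSI controller driver and vmxnet3 NIC driver (in-kernel drivers).
WANTED = ("vmw_pvscsi", "vmxnet3")


def module_name(path: str) -> str:
    """e.g. 'kernel/drivers/net/vmxnet3/vmxnet3.ko.zst' -> 'vmxnet3'"""
    return Path(path).name.split(".ko")[0]


def classify(builtin_lines, module_files, wanted=WANTED) -> dict:
    """Map each wanted driver to 'built-in', 'module' or None (not found)."""
    builtin = {module_name(line) for line in builtin_lines if line.strip()}
    loadable = {module_name(str(p)) for p in module_files}
    result = {}
    for name in wanted:
        if name in builtin:
            result[name] = "built-in"
        elif name in loadable:
            result[name] = "module"
        else:
            result[name] = None
    return result


def check_running_kernel(modules_root: str = "/lib/modules") -> dict:
    base = Path(modules_root) / platform.release()
    builtin_file = base / "modules.builtin"  # list of drivers compiled into the kernel
    builtin = builtin_file.read_text().splitlines() if builtin_file.exists() else []
    modules = base.rglob("*.ko*") if base.exists() else []  # .ko, .ko.xz, .ko.zst
    return classify(builtin, modules)


if __name__ == "__main__":
    for name, where in check_running_kernel().items():
        print(f"{name}: {where or 'not found in this kernel'}")
```

For the boot disk, a loadable vmw_pvscsi module would also need to be present in the initramfs, so a "module" result is necessary but not sufficient there.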

If anyone has any idea about the vmxnet3/network issue, please let me know! I’m happy to admit that I’m a little out of my depth here. I think it might be something to do with the cloud-init image Azure uses, but I’m happy to hear your thoughts.

As a very quick recap, to migrate an Ubuntu VM (with issues), do the following:

- Modify Netplan configuration

- Remove fs type auto in /etc/fstab

- Make changes to grub configuration

Then run sudo update-grub and reboot. After this, install the Sentinel agent and migrate away. Remember to add an E1000E vNIC post migration and to configure networking as appropriate for your environment.

One final note: I never got around to trying vCenter Converter, but I am told it largely works without issue.

Thanks for reading.