For my home firewall I’ve recently switched from using a UniFi USG Pro to a virtual Untangle Next Generation Firewall (Untangle NG Firewall) appliance. The USG has served me well, however with no future developments for USG firmware, it’s starting to show its age somewhat. More advanced VPN support is lacking, there’s no 10 Gbps LAN, and having to configure anything not exposed in the GUI via the config.gateway.json file is a bit of a headache when you start looking into BGP peering with an NSX Edge as an example. I could go on, but that is not the point of this post! I looked at the Dream Machine lineup as well as their UXG products as a replacement, however none of them looked suitable for me so I decided to look elsewhere.

There are a few options on the table: OPNsense, pfSense, OpenWrt, plus offerings from MikroTik and Sophos amongst others, but after some testing and a fair bit of reading I have settled on Untangle. Setup was a breeze and I had my network switched over relatively quickly. I run it on a cheap ESXi whitebox which has 2x 10 Gbps NICs and 2x 1 Gbps NICs. One 1 Gbps NIC is connected to my ONT, with the two 10 Gbps NICs going into a switch for the LAN side. It performs at my ISP line speed (900 Mbps down, 100 Mbps up) with excellent latency and really, really decent QoS using fq and codel (I would urge anyone remotely interested in networking to check out codel and bufferbloat – it really isn’t spoken about enough).

I run some VMs in a VMC on AWS environment which I have access to, and with the USG I had a Site to Site IPSEC VPN connecting some home lab networks with the VMC on AWS segment. This had worked pretty well with the USG, requiring no input from myself after initial configuration. I configured the same on the Untangle appliance, albeit with SHA2 rather than the older SHA1 algorithm which the USG is limited to. Initially it worked perfectly fine and traffic was passing over the IPSEC tunnel without issue. Fast forward 30 minutes or so and I noticed I could no longer reach the VMC network. Odd. IPSEC can be a real pain to get working, especially when it involves different vendors at both sides of the tunnel (in this case Untangle to NSX-T), however usually once it’s working, it stays working. Looking at the IPSEC logs, I saw:

Jan 15 16:08:28 untangle charon: 13[ENC] generating CREATE_CHILD_SA response 90 [ N(TS_UNACCEPT) ]

Jan 15 16:08:28 untangle charon: 13[IKE] failed to establish CHILD_SA, keeping IKE_SA

Jan 15 16:08:28 untangle charon: 13[IKE] traffic selectors 172.16.72.0/24 === 172.16.73.0/24 unacceptable

Jan 15 16:08:28 untangle charon: 13[IKE] received ESP_TFC_PADDING_NOT_SUPPORTED, not using ESPv3 TFC padding

Jan 15 16:08:28 untangle charon: 13[ENC] parsed CREATE_CHILD_SA request 90 [ SA No KE TSi TSr N(ESP_TFC_PAD_N) ]

Jan 15 16:08:28 untangle charon: 13[NET] received packet: from <IP removed>[500] to <IP removed>[500] (496 bytes)

Jan 15 16:08:18 untangle charon: 06[NET] sending packet: from <IP removed>[500] to <IP removed>[500] (80 bytes)

The key part being:

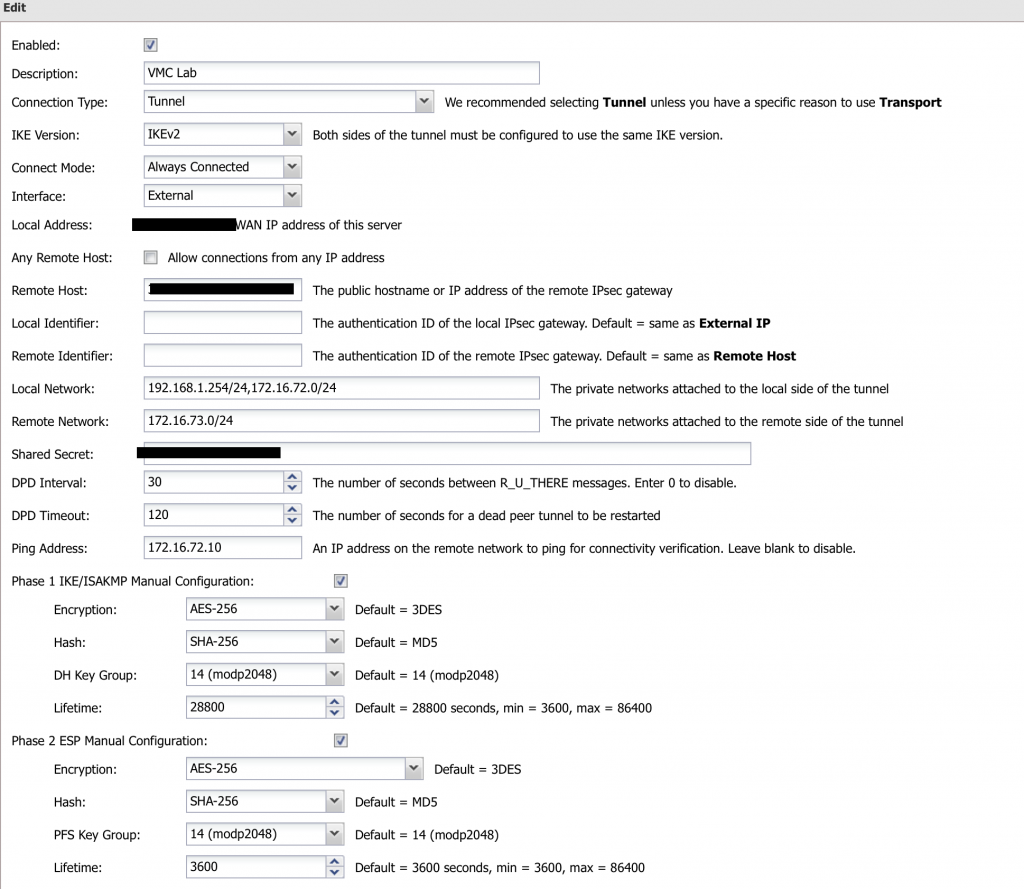

traffic selectors 172.16.72.0/24 === 172.16.73.0/24 unacceptable

172.16.72.0/24 is a home network, the one where most of my lab runs, and 172.16.73.0/24 is the far side network where I have other workloads. I also had another home network advertised (192.168.1.0/24) which I use day to day and this enables me to connect to the far side VMs without using a jump host. This network remained up. In the Untangle configuration I had both local networks defined:

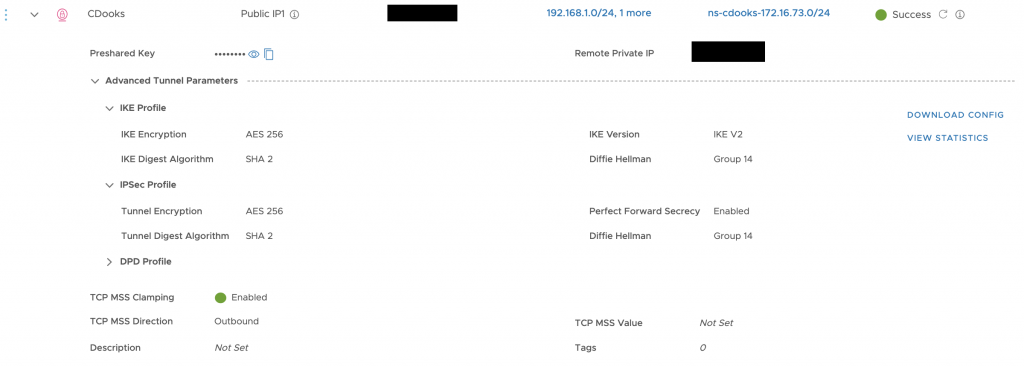

With matching configuration at the VMC/NSX-T end (it doesn’t show it, but I had 172.16.72.0/24 configured correctly):

Yet after multiple reboots, recreating the VPN, swapping the local network configuration on the Untangle, and looking at logs until the words started to blur, the issue remained. A traffic selectors unacceptable error would usually indicate an issue with the configuration, typically a mismatch between the source and/or destination networks on the two ends. For example, an incorrect network ID or a subnet mask which differs from the other end of the tunnel.
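To illustrate the kind of mismatch that error normally points to, here is a minimal Python sketch that compares the networks configured on each end of a tunnel. The function name and the dict layout are my own invention for illustration and not anything Untangle or NSX-T exposes. It also assumes both ends should list exactly the same pairs, which is stricter than IKEv2 itself, where a responder may narrow the selectors.

```python
import ipaddress


def selector_mismatches(this_end, far_end):
    """Return (local, remote) pairs that only one end of the tunnel lists.

    Each argument is a dict with "local" and "remote" lists of CIDR strings,
    written from that end's own point of view (hypothetical layout).
    ip_network() raises ValueError for a bad network ID such as 172.16.72.1/24.
    """
    def pairs(side, flip=False):
        local = [ipaddress.ip_network(n) for n in side["local"]]
        remote = [ipaddress.ip_network(n) for n in side["remote"]]
        if flip:
            # The far end's "remote" networks are this end's "local" ones.
            local, remote = remote, local
        return {(l, r) for l in local for r in remote}

    # Symmetric difference: pairs present on one end but not the other.
    return sorted(pairs(this_end) ^ pairs(far_end, flip=True))


home = {"local": ["172.16.72.0/24", "192.168.1.0/24"],
        "remote": ["172.16.73.0/24"]}
vmc = {"local": ["172.16.73.0/24"],
       "remote": ["172.16.72.0/25", "192.168.1.0/24"]}  # deliberate /25 typo

for local, remote in selector_mismatches(home, vmc):
    print(f"only one end lists {local} === {remote}")
```

With the deliberate /25 typo above, both the /24 and the /25 variants of the 172.16.72.0 pair are reported, while the 192.168.1.0/24 pair matches and stays quiet.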

I checked the configuration, I had some friends and colleagues check the configuration, I started to change things such as dropping to SHA1, I even delved into the IPSEC CLI on the Untangle but I couldn’t work out what the issue was. Thinking perhaps it may have been a bug at the VMC on AWS side, I plugged in my trusty USG again, changing the VMC side to SHA1, and everything worked as it should have. I then tried OPNsense, which also worked without issue. I was pretty confident that the issue lay with the Untangle appliance.
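If you would rather not read charon logs by eye, a small Python sketch along these lines can pull out the rejected network pair. The regular expression is modelled only on the single log line quoted earlier in this post, so treat it as an assumption. It expects one network on each side of the `===`, and the function name is made up for illustration.

```python
import re

# Modelled on the one "unacceptable" line shown in this post (an assumption,
# not a complete description of charon's log format).
TS_UNACCEPTABLE = re.compile(
    r"traffic selectors (?P<local>\S+) === (?P<remote>\S+) unacceptable"
)


def rejected_selectors(log_lines):
    """Yield (local, remote) network strings from matching log lines."""
    for line in log_lines:
        match = TS_UNACCEPTABLE.search(line)
        if match:
            yield match.group("local"), match.group("remote")


sample = [
    "Jan 15 16:08:28 untangle charon: 13[IKE] failed to establish CHILD_SA, keeping IKE_SA",
    "Jan 15 16:08:28 untangle charon: 13[IKE] traffic selectors 172.16.72.0/24 === 172.16.73.0/24 unacceptable",
]

print(list(rejected_selectors(sample)))
# → [('172.16.72.0/24', '172.16.73.0/24')]
```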

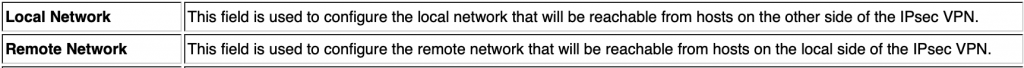

This was really starting to frustrate me and I decided to check the Untangle IPSEC wiki again and I noted something:

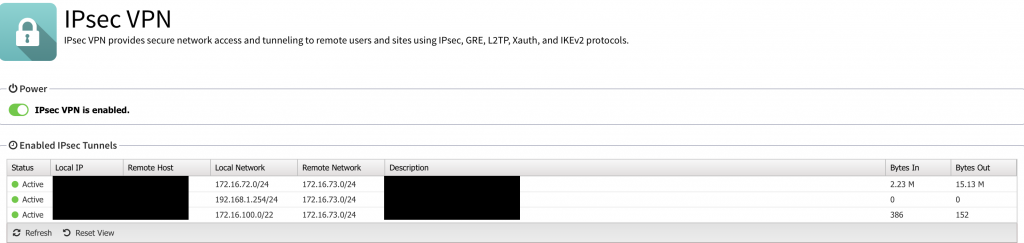

Specifically the wording around Local and Remote Network. No ‘s’, no plural. There’s also their example, which seems to show a tunnel per network pair. Although in the actual IPSEC configuration page of the appliance it states ‘the private networks attached…’ I figured there would be no harm in trying a single tunnel per local and remote network pair (I have since added a third local network for NSX segments).

Ever since I configured it this way, it’s been working flawlessly. I’ve said on my blog before that I am far from a networking expert, but I know enough to get me by. From my understanding of IPSEC and IKEv2, there is nothing stopping multiple local and remote networks being configured on the same IPSEC tunnel. In fact, it’s the ideal configuration as additional tunnels require more CPU overhead. Thankfully as you can see, not much traffic passes over the tunnel so this isn’t a big concern for me and I’m happy with it working as it is.
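For anyone with more networks than me, the one-tunnel-per-pair layout can be enumerated with a few lines of Python. This is only a sketch of the bookkeeping: the `vmc-N` tunnel names and the dict keys are hypothetical, and you would still create each tunnel by hand in the Untangle UI.

```python
from itertools import product


def tunnel_pairs(local_networks, remote_networks, prefix="vmc"):
    """Return one tunnel definition per (local, remote) network pair."""
    return [
        {"name": f"{prefix}-{index}", "local": local, "remote": remote}
        for index, (local, remote) in enumerate(
            product(local_networks, remote_networks), start=1
        )
    ]


# The two home networks and the single far side network from this post.
for tunnel in tunnel_pairs(["172.16.72.0/24", "192.168.1.0/24"],
                           ["172.16.73.0/24"]):
    print(tunnel["name"], tunnel["local"], "===", tunnel["remote"])
```

The number of tunnels is the number of local networks multiplied by the number of remote ones, which is where the extra overhead comes from as the lists grow.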

It’s quite bizarre, as the original single tunnel configuration showed up correctly in the strongSwan configuration files on the Untangle appliance. I’m not sure whether this is a UI bug, something to do with the particular version of strongSwan bundled with Untangle, or something else. I don’t pay for live support as I am a home user so I am not able to raise it with Untangle, however I have posted about the issue on their forum and I’ll update if there’s a response from their staff.

The most frustrating thing was that it took me a while to figure out what was going on so I figured I’d write this post in the hope it helps someone else in the future.

As ever, thanks for reading.

I had this error on OPNsense and in my case, it was due to P2 being accidentally disabled. Once I enabled it, the CHILD_SA was established.